What is it that we want from our data architecture?

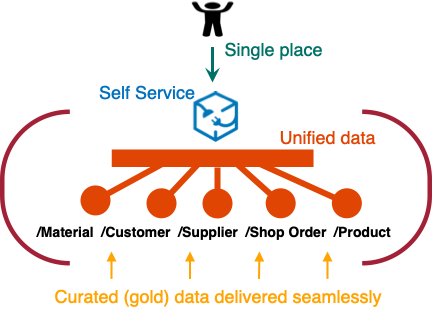

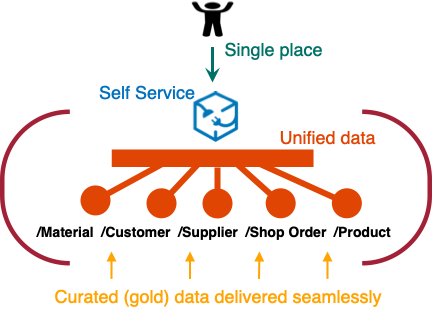

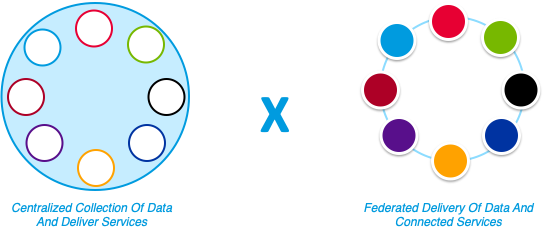

This is the dream: We strive for a unified and usable data and analytic platform

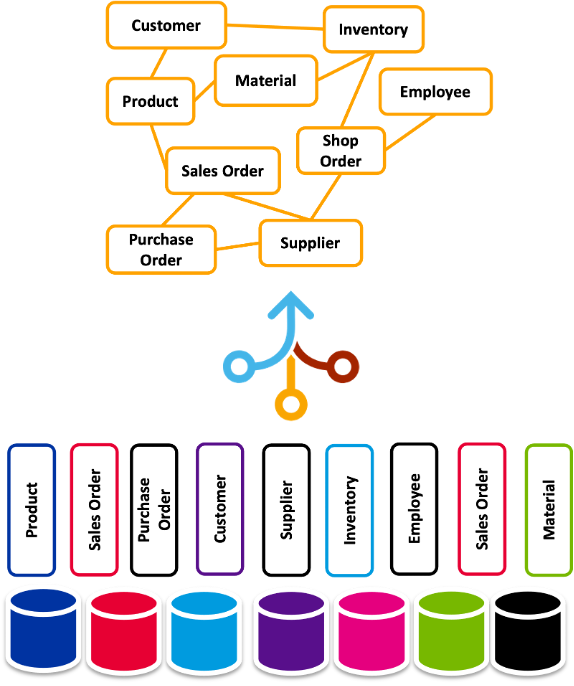

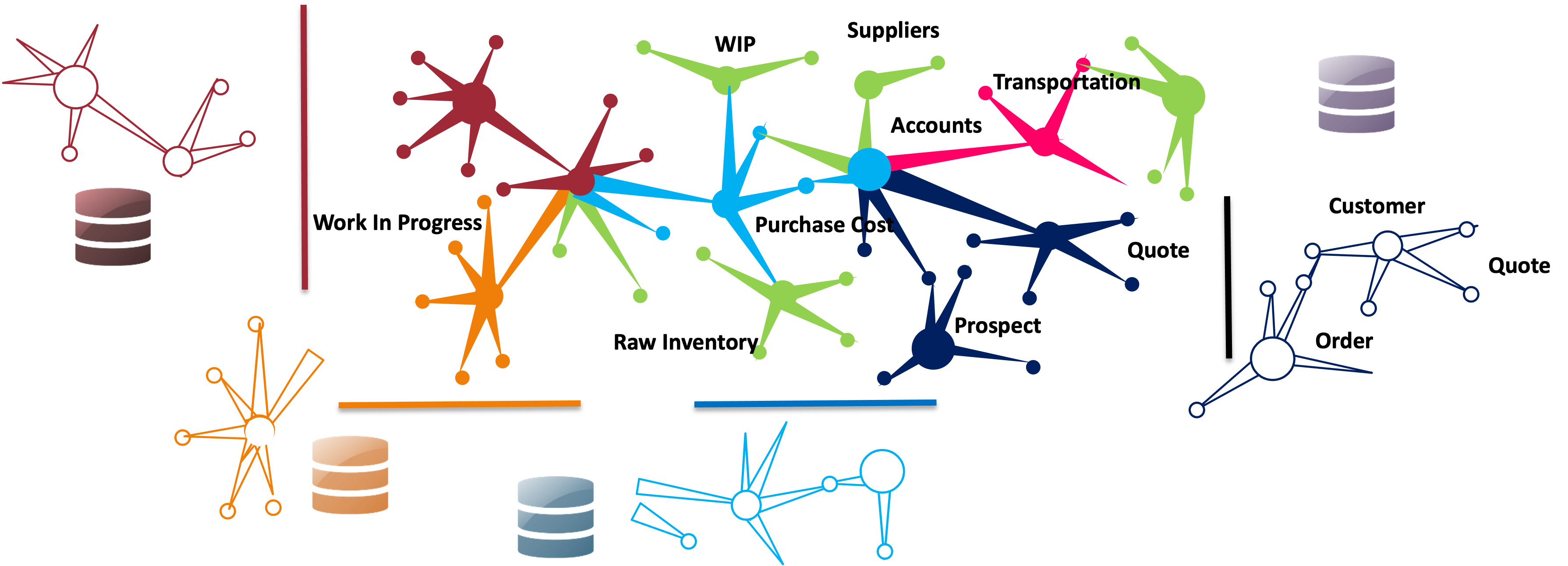

A single, coherent, and consistent view of the data across the organization

Combining data from different sources and providing a unified view

We need data that is of acceptable quality, readily available, and easily accessible.

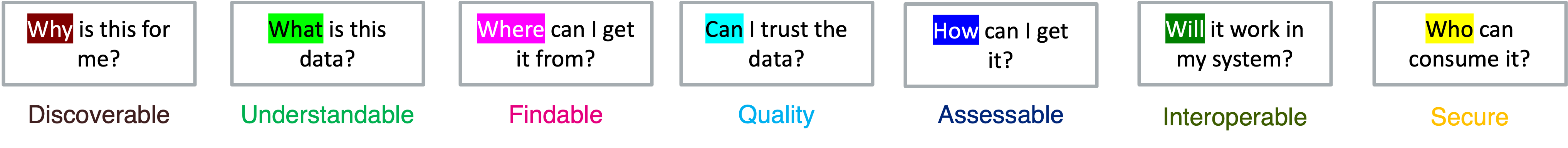

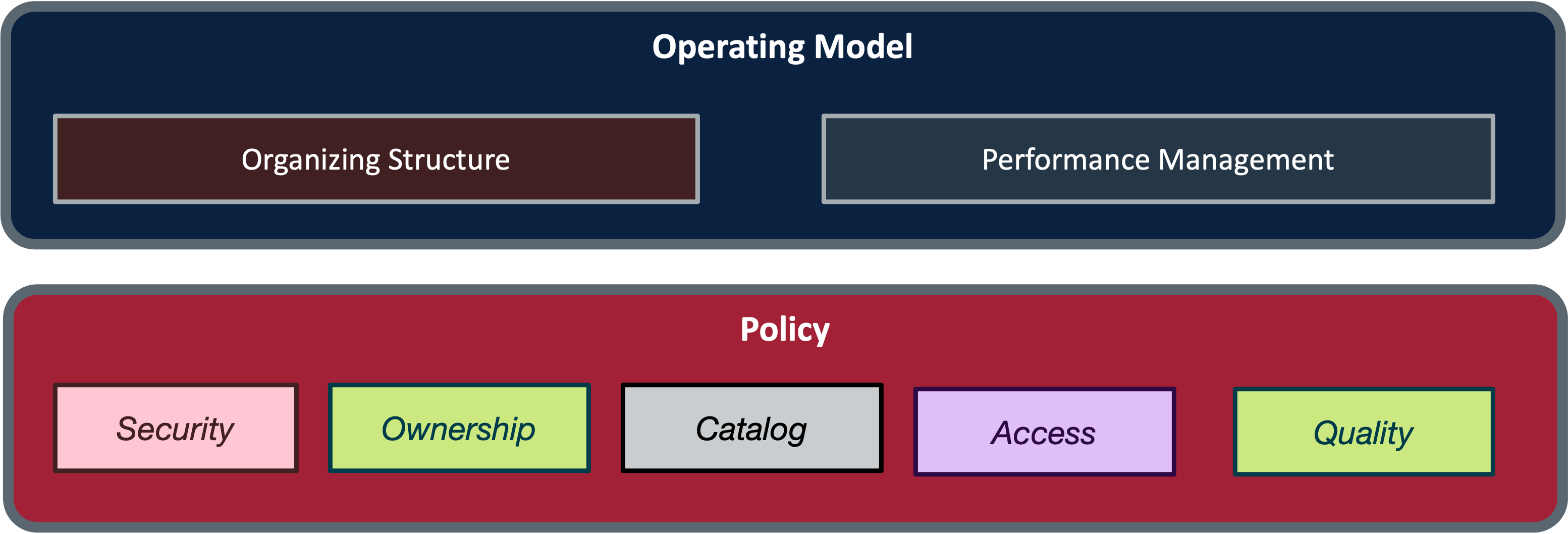

We want the data to be usable. How do we ensure it?

Making Data Useful and Integrated:

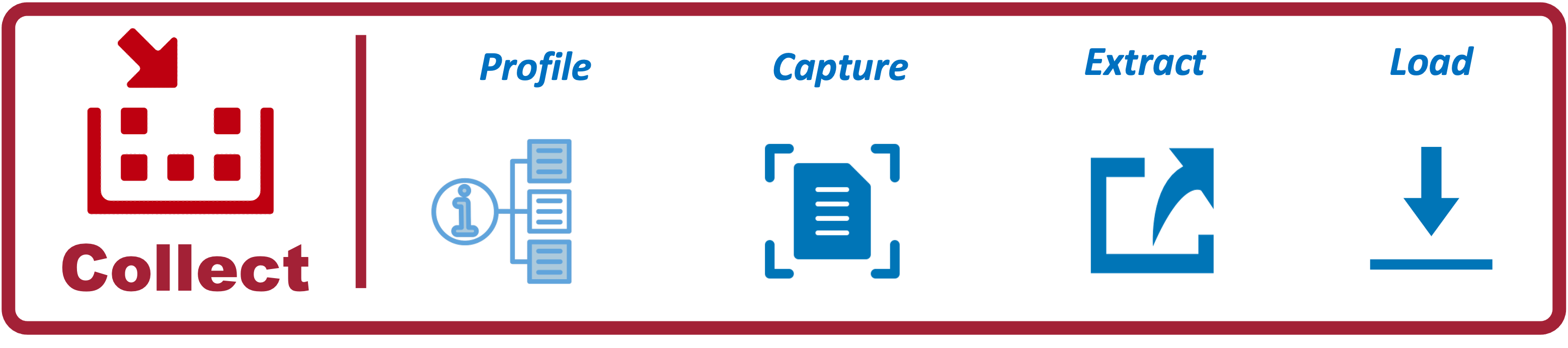

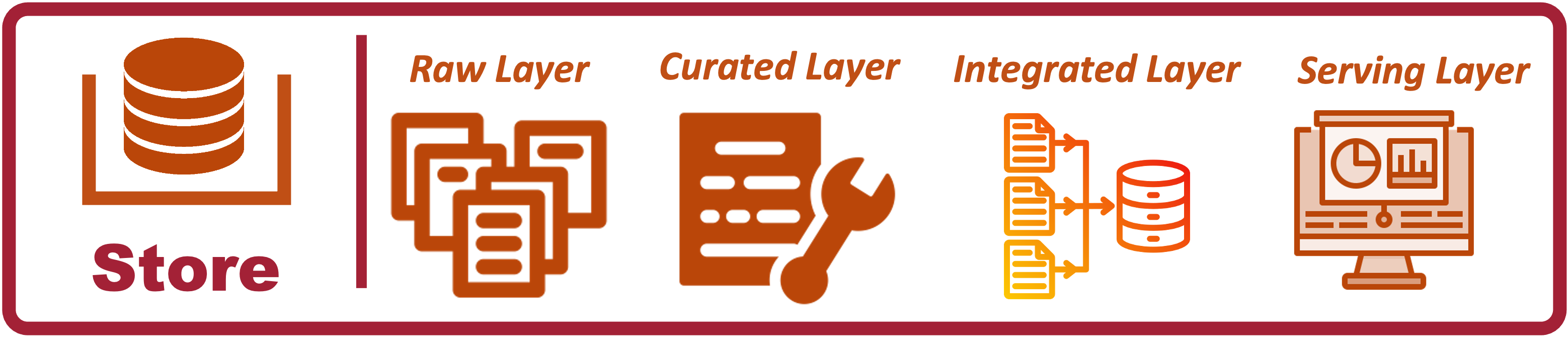

Collect the data for analytics:

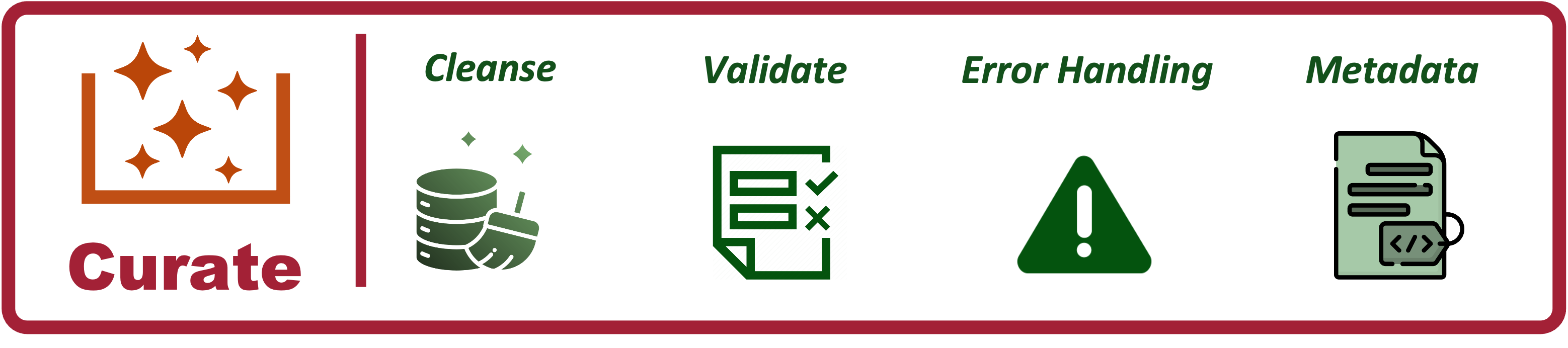

Curate the data for analytics:

Storage for Data Analytics: Coming Soon

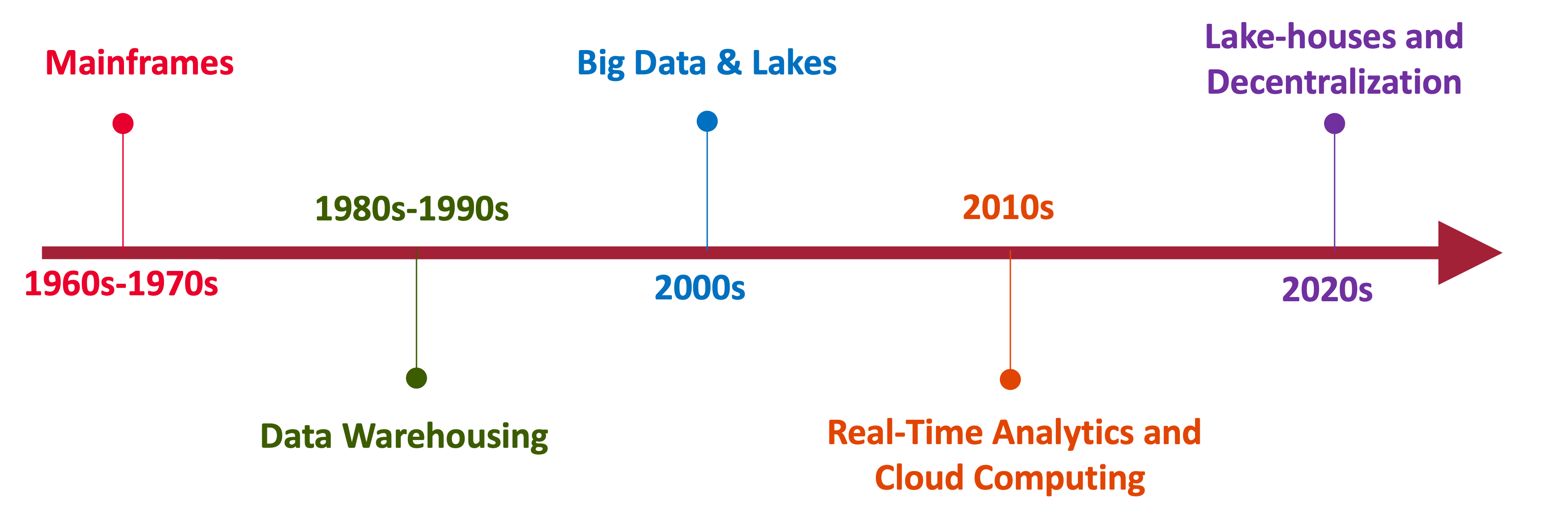

A Brief History

Yesterday, Today and Tomorrow of Data Processing

What? An enterprise-wide, consistent data management design. Why? To reduce the time it takes to deliver data integration and interoperability. How? Through metadata.

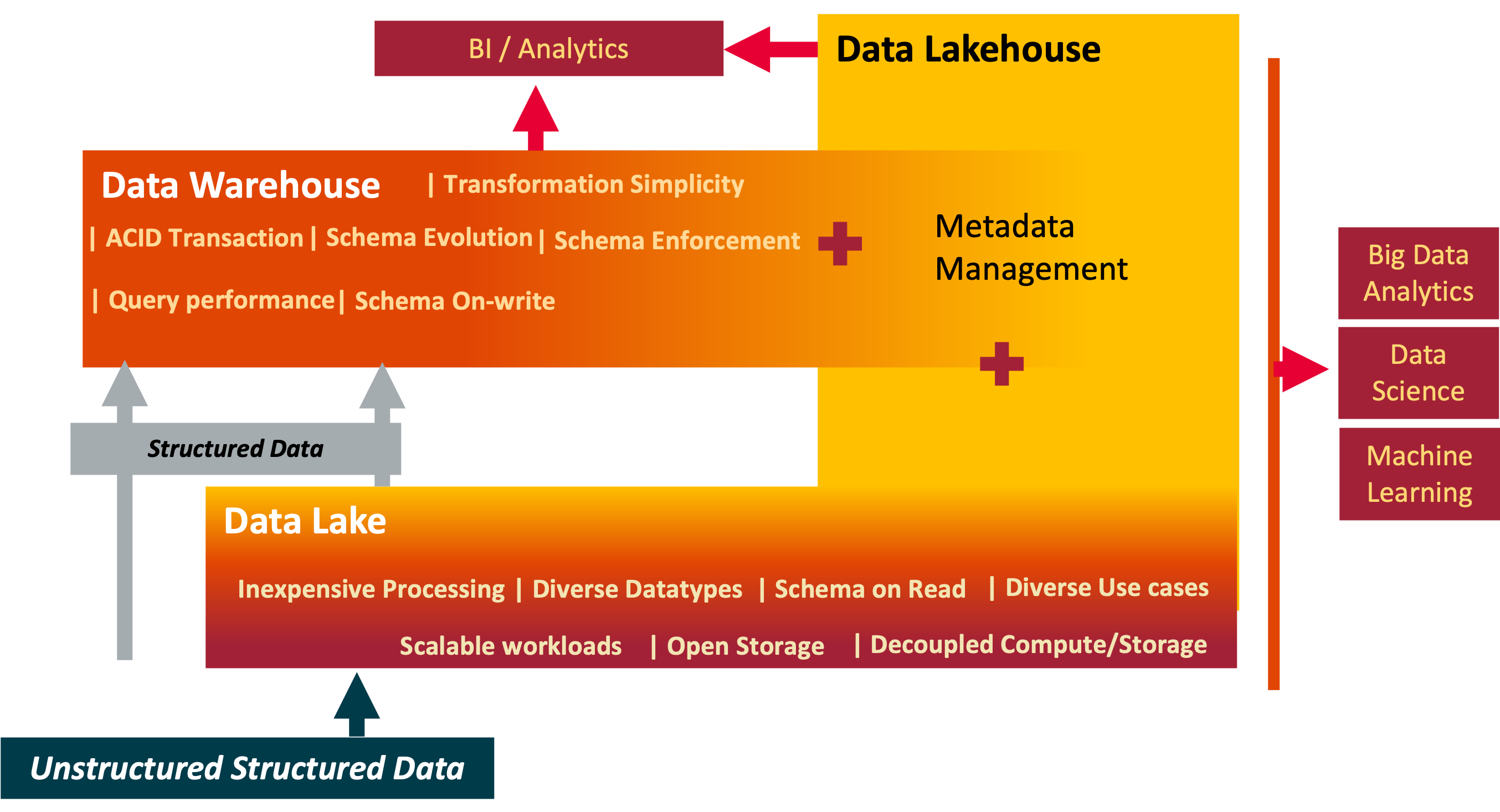

Storage is foundational, and maturing technologies have simplified the choices. A technical overview.

One Model to rule them all

Coming Soon

A more effective format that is both simple and truly serves the intent of a policy

Published in Processing, 2024

Batch Transfer: one of the most common extraction scenarios, for now at least.
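A common way to run a batch extraction is incrementally. Each run pulls only the rows that changed since the last run's high-water mark, and it pulls them in fixed-size batches so a large extract never has to sit in memory all at once. The sketch below makes several assumptions. An in-memory SQLite table stands in for the source system, and the `customers` table, its `updated_at` column, and the `extract_batches` helper are all hypothetical.

```python
import sqlite3
from typing import Iterator


def extract_batches(conn: sqlite3.Connection, watermark: str,
                    batch_size: int = 2) -> Iterator[list[tuple]]:
    """Yield rows changed after `watermark`, batch_size rows at a time."""
    cursor = conn.execute(
        "SELECT id, name, updated_at FROM customers "
        "WHERE updated_at > ? ORDER BY updated_at, id",
        (watermark,),
    )
    while True:
        batch = cursor.fetchmany(batch_size)
        if not batch:
            break
        yield batch


# Hypothetical source table standing in for an operational system.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER, name TEXT, updated_at TEXT)")
conn.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)",
    [(1, "Ada", "2024-01-01"), (2, "Grace", "2024-02-01"),
     (3, "Linus", "2024-03-01"), (4, "Barbara", "2024-04-01")],
)

batches = list(extract_batches(conn, watermark="2024-01-15"))
# The largest updated_at seen becomes the watermark to persist for the next run.
new_watermark = max(row[2] for batch in batches for row in batch)
```

In a real pipeline, each batch would be written to the landing area before the next one is fetched. The new watermark would be saved only after the whole run succeeds.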

Published in Processing, 2024

Move compressed data
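One way to move compressed data works like this. Compress the extract at the source, ship the archive together with a checksum, and verify the checksum before decompressing at the target. The sketch below uses gzip and SHA-256 from the Python standard library. The choice of codec and hash, the helper names, and the directory layout are all illustrative assumptions, and a local file copy stands in for the actual network transfer.

```python
import gzip
import hashlib
import shutil
import tempfile
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Hash the file in chunks so large extracts are never fully in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def compress_for_transfer(src: Path, staging_dir: Path) -> tuple[Path, str]:
    """Gzip `src` into the staging area; return the archive and its checksum."""
    archive = staging_dir / (src.name + ".gz")
    with src.open("rb") as fin, gzip.open(archive, "wb") as fout:
        shutil.copyfileobj(fin, fout)
    return archive, sha256_of(archive)


def receive(archive: Path, expected_sha256: str, target_dir: Path) -> Path:
    """On the target side: verify the checksum first, then decompress."""
    if sha256_of(archive) != expected_sha256:
        raise ValueError(f"checksum mismatch for {archive.name}")
    restored = target_dir / archive.name.removesuffix(".gz")
    with gzip.open(archive, "rb") as fin, restored.open("wb") as fout:
        shutil.copyfileobj(fin, fout)
    return restored


with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    for name in ("source", "staging", "target"):
        (root / name).mkdir()
    src = root / "source" / "orders.csv"  # hypothetical extract file
    src.write_text("id,amount\n" + "".join(f"{i},{i * 10}\n" for i in range(1000)))

    archive, checksum = compress_for_transfer(src, root / "staging")
    # Stand-in for the real copy step (SFTP, object storage upload, ...).
    landed = Path(shutil.copy(archive, root / "target"))
    restored = receive(landed, checksum, root / "target")

    ratio = archive.stat().st_size / src.stat().st_size
    round_trip_ok = restored.read_bytes() == src.read_bytes()
```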

Published in Processing, 2024

Transfer data from source to target

Published in Processing, 2024

I tend not to draw hard lines between applying processing logic directly on the source system before extracting the data and performing transformations post-ingestion in an analytics platform. Both approaches are valid, depending on factors such as data volume, complexity, real-time requirements, and system architecture. However, at the scale most modern data workloads operate, processing is usually best done post-ingestion.

Published in Processing, 2024

Data profiling is essential for understanding the quality, structure, and consistency of data
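As a minimal illustration, a first profiling pass can compute a few metrics per column: how many values are null, how many distinct values occur, and which value types actually appear. A column that mixes types is often the first sign of a quality problem. The sketch below runs on plain Python records. The `profile` function and the sample rows are hypothetical, and real profiling tools report far more than this.

```python
from collections import Counter
from typing import Any


def profile(records: list[dict[str, Any]]) -> dict[str, dict[str, Any]]:
    """Per-column null count, distinct count and observed value types.

    A key missing from a record is counted as a null. Values must be hashable.
    """
    columns = sorted({key for record in records for key in record})
    report = {}
    for col in columns:
        values = [record.get(col) for record in records]
        non_null = [v for v in values if v is not None]
        report[col] = {
            "nulls": len(values) - len(non_null),
            "distinct": len(set(non_null)),
            "types": dict(Counter(type(v).__name__ for v in non_null)),
        }
    return report


rows = [
    {"id": 1, "country": "NZ", "amount": 10.5},
    {"id": 2, "country": None, "amount": "12"},  # amount arrived as a string
    {"id": 3, "country": "NZ"},                  # amount missing entirely
]
report = profile(rows)
```

In this sample, `report["amount"]` shows one null and two different types (`float` and `str`). That is the kind of inconsistency profiling is meant to surface before the data is curated.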

Published in Processing, 2024

Deliver quality data

Published in Product, 2024

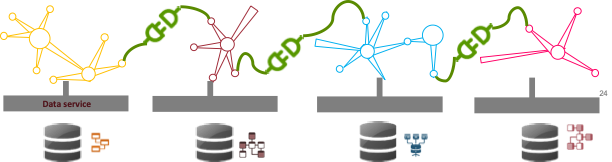

By applying microservice principles, data products can be designed to be modular, scalable, and maintainable, providing greater flexibility and agility in data-driven environments

Published in Data Platform, 2024

Deliver Data as an Organized, Unified and Consistent Product

Published in Processing, 2024

Keep the Raw Layer “Raw”

Published in Processing, 2024

Capture data from source system for processing in an Analytics System

Leverage Business Capability Maps for Data Domains

Published in Master Data, 2024

Leverage Business Capability Maps for Data Domains

Published in Governance, 2024

Consistency in what we measure and how we measure data domains: a method with an example scenario.

Some upfront work is required to ensure the success of data engineering projects. I have used this checklist to provide a framework for collaborating with multiple stakeholders to define clear requirements and designs.

An often overlooked feature of Parquet is its support for interoperability. That support is key for enterprise data platforms, which serve many different tools and systems and rely on data exchange and integration. This is my take on Parquet best practices, and I use Python with PyArrow to demonstrate them.

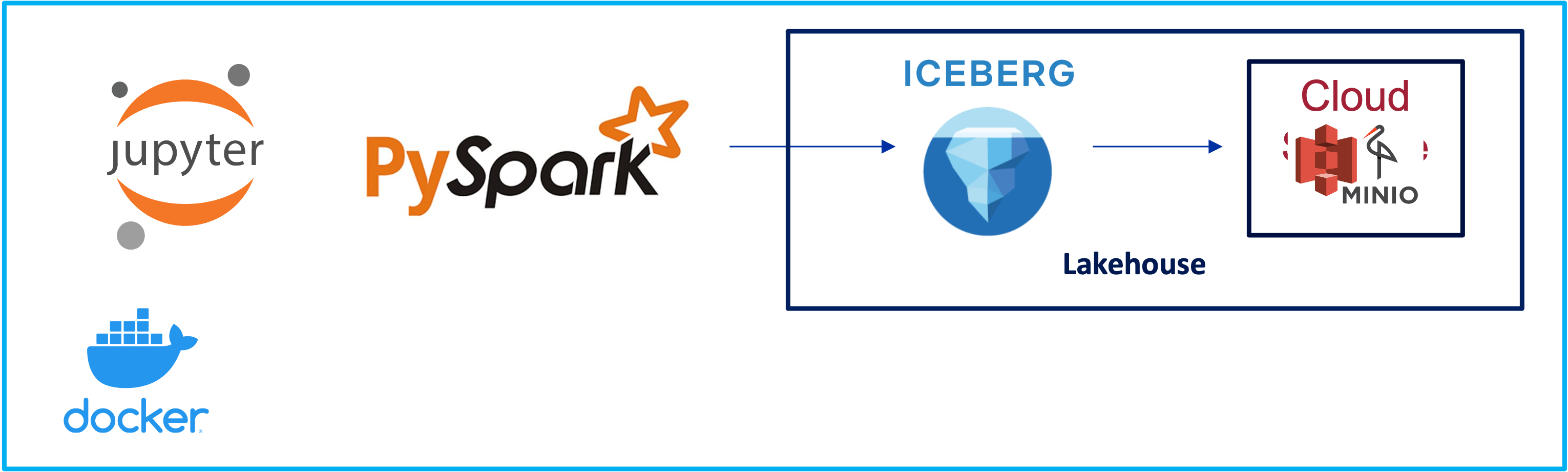

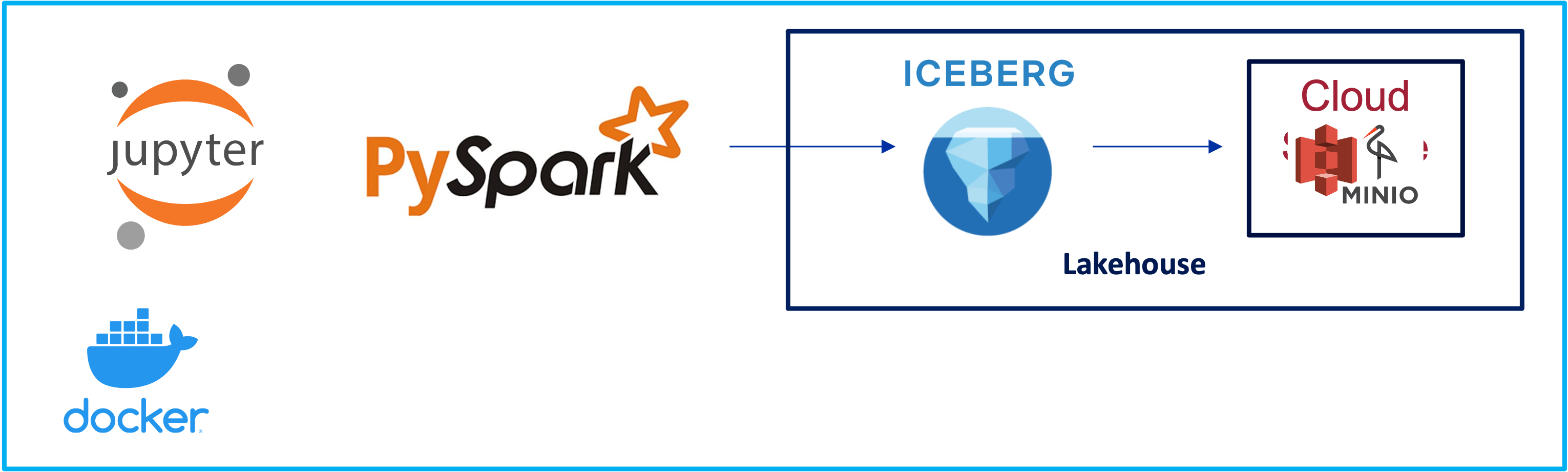

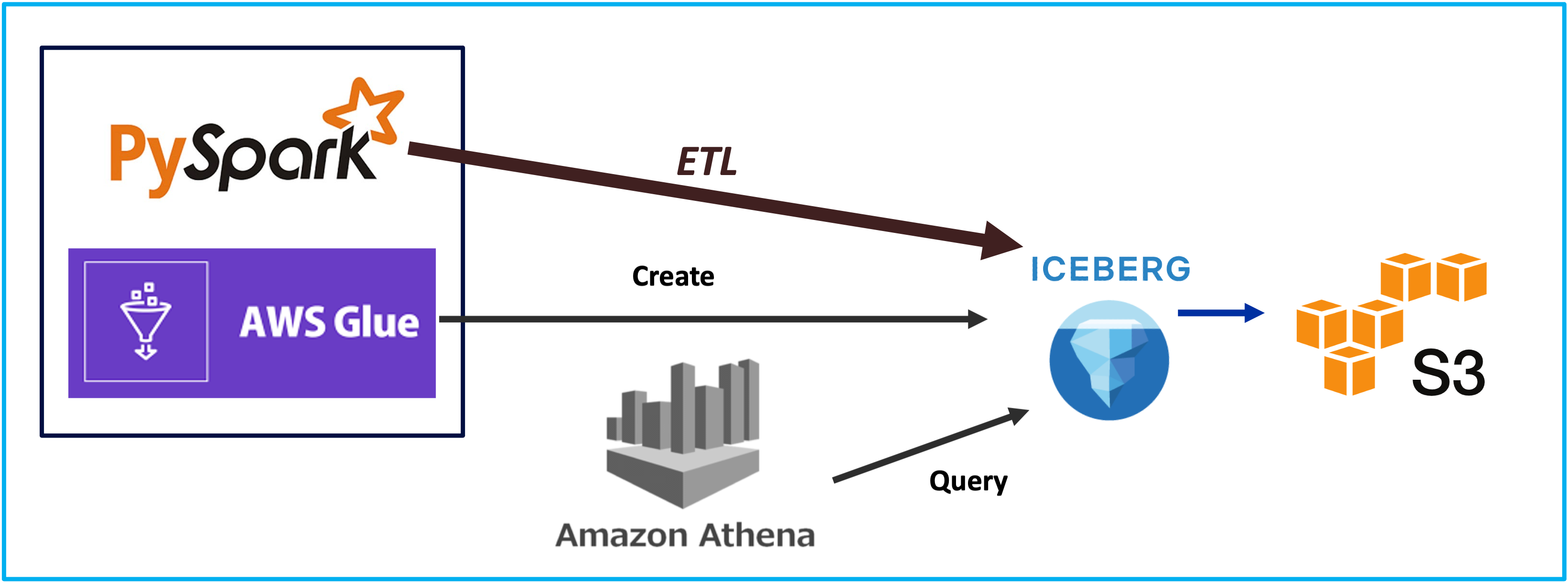

Lakehouse, Iceberg, Spark, 2024

Lakehouse, Iceberg, Spark, 2024

Lakehouse, Minio, 2024

When I experiment with new technologies, I like to run them locally on my machine. Minio is a perfect local simulation of cloud storage: you can deploy it locally and interact with it as you would with S3 object storage.
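Because Minio speaks the S3 API, the usual AWS SDK can talk to it once the client points at the local endpoint. The sketch below assumes a Minio server on its default port 9000 with its default `minioadmin` credentials, and it uses boto3, which is not mentioned in the original. The helper names are hypothetical. boto3 is imported inside `make_client` so the settings can be inspected without the SDK installed.

```python
def minio_client_kwargs(endpoint: str = "http://localhost:9000",
                        access_key: str = "minioadmin",
                        secret_key: str = "minioadmin") -> dict:
    """Settings that point a boto3 S3 client at a local Minio instead of AWS.

    The endpoint and credentials are assumed Minio defaults; adjust to your setup.
    """
    return {
        "service_name": "s3",
        "endpoint_url": endpoint,
        "aws_access_key_id": access_key,
        "aws_secret_access_key": secret_key,
        "region_name": "us-east-1",
    }


def make_client():
    """Create the S3 client; requires boto3 and a running Minio server."""
    import boto3  # imported lazily so the settings above need no SDK
    return boto3.client(**minio_client_kwargs())


# With Minio running, the usual S3 calls work unchanged, e.g.:
#   s3 = make_client()
#   s3.create_bucket(Bucket="lakehouse")
#   s3.put_object(Bucket="lakehouse", Key="raw/hello.txt", Body=b"hello")
```

Keeping the endpoint in one place means the same code can later target real S3 simply by dropping `endpoint_url` and the static credentials.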

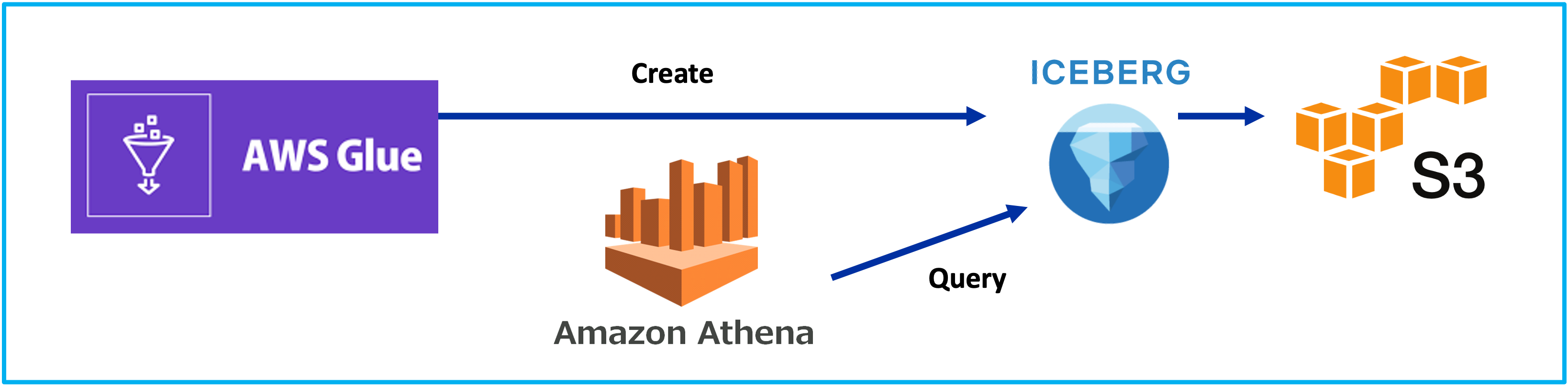

Lakehouse, Glue, 2024

Lakehouse, Iceberg, 2024

Application Service, AWS, 2024

The installables can be found on the AWS website: https://docs.aws.amazon.com/amazondynamodb/latest/developerguide/DynamoDBLocal.DownloadingAndRunning.html
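Once DynamoDB Local is running, the SDK only needs an `endpoint_url` pointing at it. DynamoDB Local accepts placeholder credentials. The sketch below is a minimal example under several assumptions. It assumes the default port 8000 and uses boto3, which the original does not mention. The `orders` table with its `pk`/`sk` key design and the helper names are hypothetical.

```python
def create_table_request(table_name: str) -> dict:
    """Arguments for DynamoDB's CreateTable: a composite (partition + sort) key."""
    return {
        "TableName": table_name,
        "KeySchema": [
            {"AttributeName": "pk", "KeyType": "HASH"},   # partition key
            {"AttributeName": "sk", "KeyType": "RANGE"},  # sort key
        ],
        # Only key attributes are declared; other attributes are schemaless.
        "AttributeDefinitions": [
            {"AttributeName": "pk", "AttributeType": "S"},
            {"AttributeName": "sk", "AttributeType": "S"},
        ],
        "ProvisionedThroughput": {"ReadCapacityUnits": 5, "WriteCapacityUnits": 5},
    }


def make_local_client(endpoint: str = "http://localhost:8000"):
    """boto3 DynamoDB client aimed at DynamoDB Local (placeholder credentials)."""
    import boto3  # imported lazily so the request above can be built without it
    return boto3.client(
        "dynamodb",
        endpoint_url=endpoint,
        region_name="us-west-2",
        aws_access_key_id="fakeMyKeyId",
        aws_secret_access_key="fakeSecretAccessKey",
    )


# With DynamoDB Local running:
#   make_local_client().create_table(**create_table_request("orders"))
```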

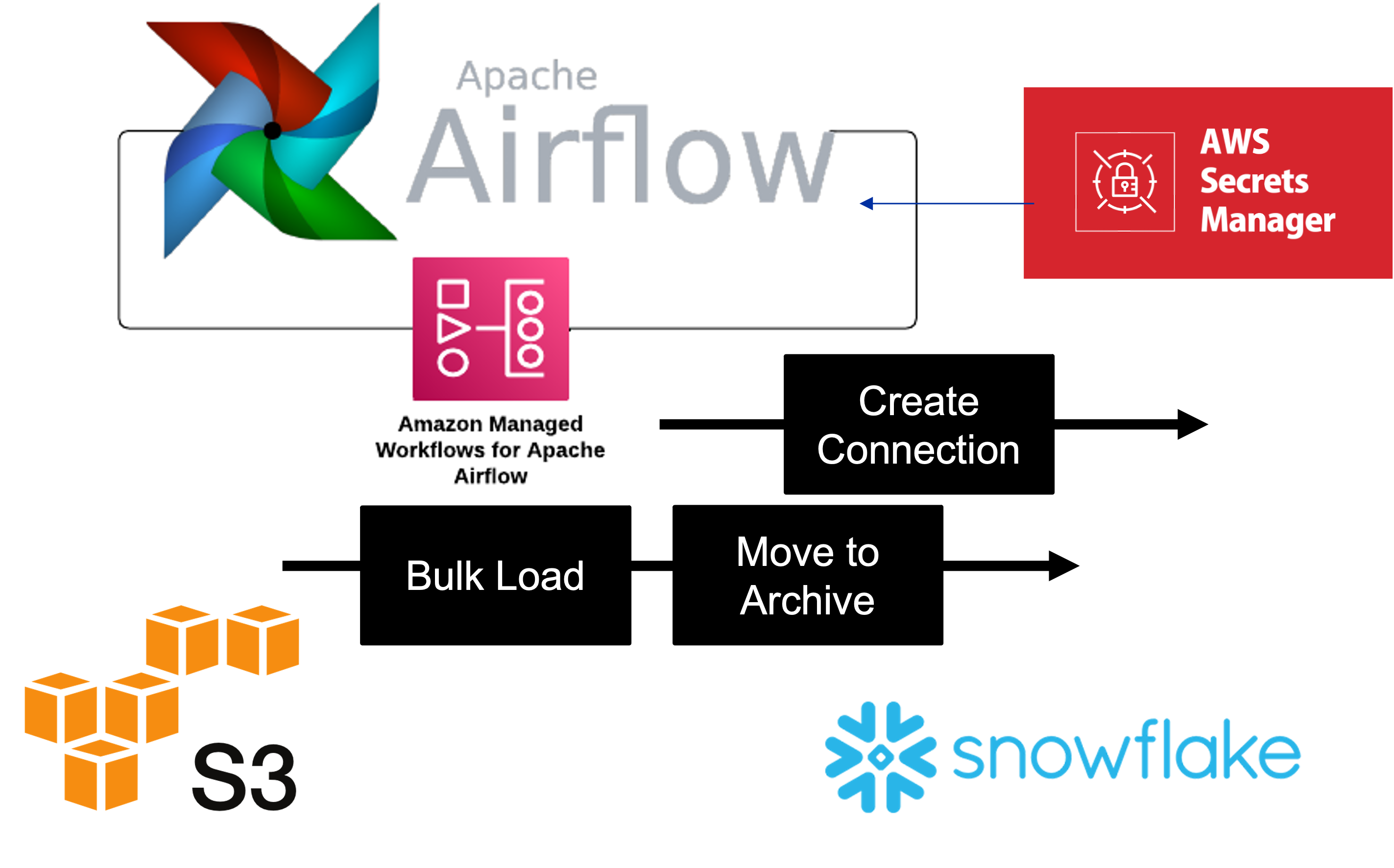

Lakehouse, Airflow, 2024

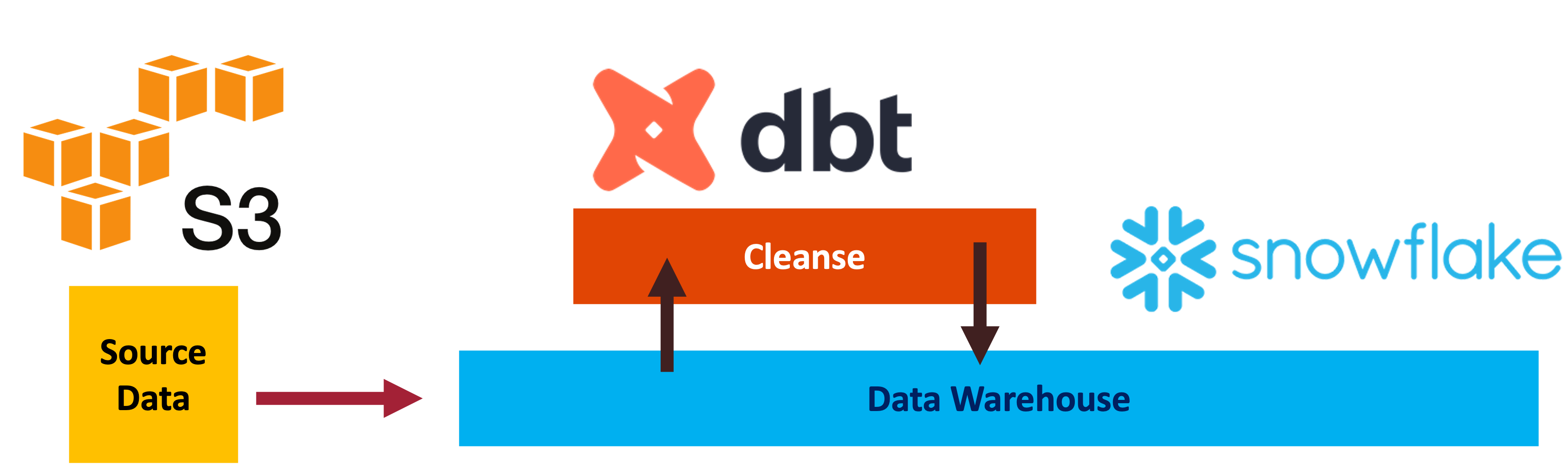

Data Warehouse, Snowflake, 2024

Data Warehouse, Snowflake, 2024

Installed DBT Core locally. Running `dbt debug` reports the installation and its configuration.

Installed DBT Utils

Data Processing, GCP, 2024

Data Quality, Snowflake, 2024

Data Processing, Beam, 2024

Data Processing, Beam, 2024

Data Lake, Glue, 2024

Infrastructure, Azure, 2024

Lakehouse, Airflow, 2024

Data Warehouse, S3, 2024

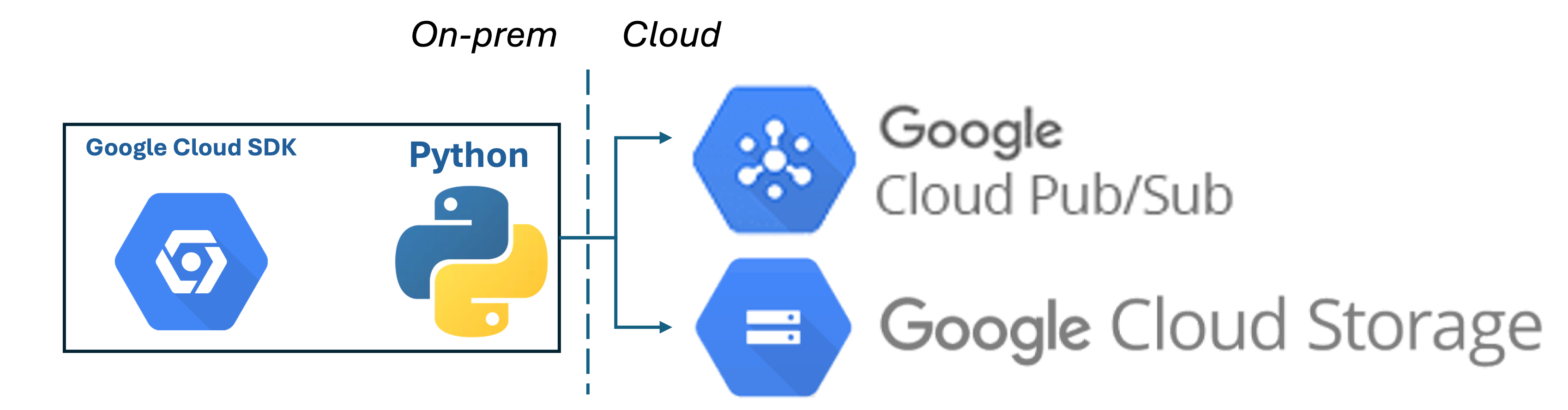

gcloudSDK, GCP, 2024

Monitoring, GCP, 2024

AI/ML, DialogflowCX, 2024

AI/ML, DialogflowCX, 2024

Data Lake, LakeFormation, 2025

AWS Lake Formation = Scaled Data Lake + Scaled Security Provisioning
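The "scaled security provisioning" half of that equation comes from defining grants centrally and applying them through Lake Formation, instead of hand-editing bucket and IAM policies. The sketch below expands a simple role-to-tables mapping into `GrantPermissions` requests for boto3's `lakeformation` client. The role ARNs (using the AWS documentation example account 111122223333), the database and table names, and the choice of `SELECT`/`DESCRIBE` are hypothetical.

```python
def table_grants(policy: dict[str, list[tuple[str, str]]],
                 permissions: tuple[str, ...] = ("SELECT", "DESCRIBE")) -> list[dict]:
    """Expand {principal_arn: [(database, table), ...]} into GrantPermissions requests."""
    requests = []
    for principal, tables in policy.items():
        for database, table in tables:
            requests.append({
                "Principal": {"DataLakePrincipalIdentifier": principal},
                "Resource": {"Table": {"DatabaseName": database, "Name": table}},
                "Permissions": list(permissions),
            })
    return requests


def apply_grants(requests: list[dict]) -> None:
    """Send each grant to Lake Formation; requires boto3 and AWS credentials."""
    import boto3  # imported lazily: building the requests needs no AWS access
    client = boto3.client("lakeformation")
    for request in requests:
        client.grant_permissions(**request)


# Hypothetical policy: which roles may read which catalog tables.
policy = {
    "arn:aws:iam::111122223333:role/analyst": [("sales", "orders"), ("sales", "customers")],
    "arn:aws:iam::111122223333:role/finance": [("sales", "orders")],
}
requests = table_grants(policy)
```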