My Master Data Management Reference Architecture

Published in Master Data, 2024

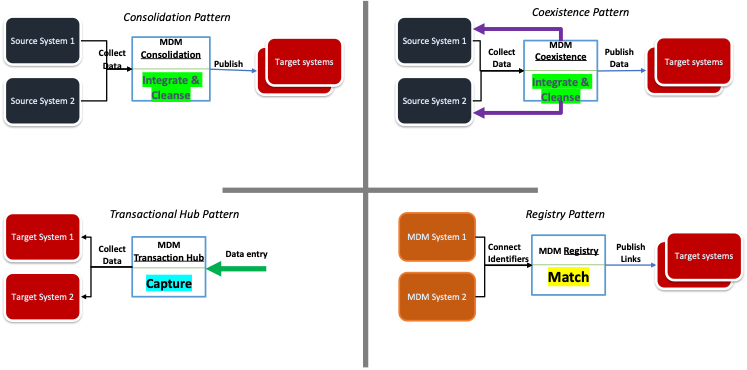

Master Data Management Patterns

Understanding the four main MDM patterns goes a long way toward building a viable architecture for managing master data. Most of the MDM implementations would fall within these four main patterns. There is an seperate article which I have written on this topic.

Master Data as a Product

Addressable

Master data is required to be easily accessible, identifiable, and usable by its intended consumers. Addressability is achieved through well-defined APIs and interfaces that allow users to access and query master data efficiently either real-time or batch ensuring that it can be integrated into applications and analytics platforms seamlessly. Data Catalogs: Providing comprehensive data catalogs with metadata that describe the master data, its structure, and how to access it. APIs and Endpoints: Offering APIs and endpoints that facilitate real-time or batch access to master data,

Trustworthy

Definition: Trustworthy data means that master data is accurate, reliable, and consistent, instilling confidence among its users. Implementation: To ensure data trustworthiness, the Data Mesh approach includes: Data Quality Standards: Establishing and enforcing data quality standards and metrics to maintain accuracy, completeness, and consistency. Governance Policies: Implementing robust data governance policies and practices that include data stewardship, data validation, and regular audits to ensure data integrity. Transparency: Providing transparency into data sources, transformations, and lineage to build trust in the data’s reliability. Discoverable

Definition: Discoverable data means that users can easily find and understand the master data they need. Implementation: Enhancing discoverability involves: Data Cataloging: Maintaining a centralized data catalog that allows users to search for and discover master data across domains. Metadata Management: Ensuring detailed metadata is available to describe the data’s attributes, usage, and context.

Sociable

Definition: Sociable data means that master data is integrated and interoperable with other data products and systems. Implementation: Facilitating data sociability involves: Standardization: Adopting common data standards and protocols to ensure interoperability across different data products and domains. Integration: Implementing data integration practices that allow master data to be seamlessly shared and utilized across various applications and systems.

Evolving

Definition: Evolving data means that master data is continuously improved and updated to meet changing business needs and conditions. Implementation: Supporting data evolution involves: Feedback Loops: Establishing mechanisms for collecting and acting on feedback from data consumers to drive continuous improvements. Agile Practices: Adopting agile methodologies to rapidly iterate on data product features and enhancements based on evolving requirements and business needs. Key Components of Master Data as a Product

Product Ownership

Appoint a Data Product Owner responsible for the master data product. This person ensures that the product meets user needs, aligns with business goals, and adheres to quality standards. Product Roadmap

Develop and maintain a product roadmap that outlines planned features, improvements, and milestones for the master data product. This roadmap guides the development and enhancement of the master data. Data Governance

Implement a governance framework that defines policies, standards, and procedures for managing master data. This includes data stewardship, data quality management, and compliance with regulatory requirements. User Experience

Design user-friendly interfaces and tools for accessing and interacting with master data. Ensure that data consumers have a positive experience when using the master data product. Documentation and Support

Provide comprehensive documentation and support resources for the master data product. This includes user guides, API documentation, and training materials. Data Lifecycle Management

Manage the entire lifecycle of master data, including creation, maintenance, updates, and archival. Ensure that data remains accurate, relevant, and accessible throughout its lifecycle. By applying the principles of Data as a Product within the Data Mesh framework, organizations can effectively manage and deliver master data in a way that maximizes its value, ensures its quality, and meets the needs of its consumers. This approach fosters a more accountable, responsive, and user-centric approach to master data management.

Data Storage and Management

he central repository that stores master data and serves as the authoritative source. It supports data storage, retrieval, and management of master data entities. Data Warehousing: A system for aggregating and storing large volumes of data from various sources, often used in conjunction with MDM to provide historical data and support analytics.

Data Integration ETL/ELT Processes: Extract, Transform, Load (ETL) or Extract, Load, Transform (ELT) processes are essential for integrating data from various source systems into the MDM hub. These processes handle data extraction from different sources, transformation to match MDM standards, and loading into the MDM repository. Data Connectors and APIs: Connectors and APIs facilitate real-time or batch integration with source systems, enabling data exchange and synchronization between MDM and other systems.

- Data Quality Management Data Cleansing: Processes for cleaning and standardizing data to correct inaccuracies, remove duplicates, and ensure consistency. This includes parsing, normalization, and validation of data. Data Profiling: Analyzing data to understand its structure, content, and quality. This helps in identifying data quality issues and assessing the completeness and accuracy of master data.

- Data Matching and Merging Deduplication: Identifying and removing duplicate records to ensure a single version of the truth. This involves algorithms and rules for matching records based on similarities and discrepancies. Record Linkage: Linking records from different sources that refer to the same entity. This requires matching algorithms and data linking techniques to consolidate information. 4.

- Data Governance and Metadata Management Governance Policies: Defining and enforcing rules and policies for data quality, security, and compliance. This includes access controls, data ownership, and data stewardship roles. Metadata Management: Managing metadata that describes the structure, definitions, and relationships of master data. Metadata management supports data lineage, data cataloging, and documentation.

- Data Lineage and Auditing Data Lineage: Tracking the flow of data from its origin to its final destination. This provides visibility into data transformations, dependencies, and usage. Data Auditing: Monitoring and recording data changes and access to ensure compliance and traceability. Auditing helps in identifying data issues and maintaining data integrity.

- Data Synchronization Real-Time Data Synchronization: Ensuring that changes in master data are reflected in real-time across integrated systems. This involves mechanisms for near-real-time data updates and synchronization. Batch Processing: Handling periodic updates and synchronization of master data through batch processing jobs. This is typically used for large-scale data integration tasks.

- Data Security and Privacy Data Encryption: Protecting master data through encryption to ensure confidentiality and prevent unauthorized access. Access Controls: Implementing role-based access controls (RBAC) and permissions to manage who can view, modify, or delete master data.

- Data Modeling and Schema Design Canonical Data Models: Designing data models that define the standard structure and attributes of master data entities. This ensures consistency and interoperability across systems. Schema Design: Designing schemas for the MDM hub and data integration processes, including tables, indexes, and relationships.

- Data Visualization and Reporting Dashboards and Reports: Creating visualizations and reports to monitor master data quality, integration status, and governance metrics. This helps stakeholders make informed decisions based on master data insights.

- Self-Service Tools Data Access and Querying: Providing tools for users to access, query, and interact with master data without needing extensive technical expertise. This includes self-service BI and data discovery tools. Data Management Interfaces: Offering interfaces for data stewards and users to manage and govern master data, including data entry, validation, and corrections.